Our project was to generate emoticons based on a user's expression in real-time video. We did this by using a particle filter, where particles were ellipses. We used gradients and color histograms to generate particle weights, and a median filter to find a user's face in a video frame. We used an eigenspace, created using SVD on training data, to do exemplar-based matching of novel expressions to our database.

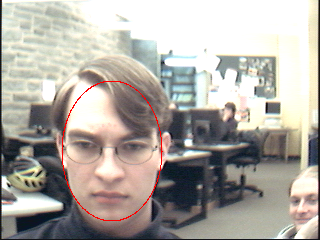

The first task in our project was to find the face in the image. We accomplished this task using a particle filter of ellipses of fixed aspect ratio. A particle's fitness was initially evaluated based on two measurements, color and gradient at points along the edge of the ellipse, both suggested by Stan Birchfield in [1].

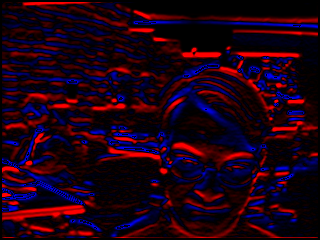

The first is a fitness component is based on the colors present inside the ellipse of the particle. Matching is based on simple histogram intersection of the histogram of colors in the particle (in the r-g color space, quantized to an 8x8 histogram) and the "reference histogram," created offline from a processed image of the face. The processed image ensures that the reference histogram only contains the colors present in the face area, which includes some hair so that the particle is less likely to get stuck on a solid, skin-colored surface.

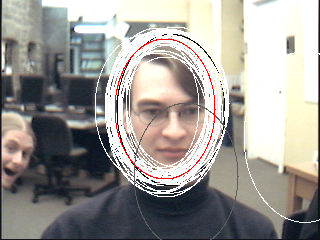

The second component considers the gradient of the image along the edge of an elliptical particle. The image is preprocessed to determine the gradient in the x and y directions using 5x5 Sobel operators. A directional gradient is calculated for points on the edge of the ellipse, in the direction of the normal to the ellipse at each point. It was thought ellipses with high gradient values would be those that had many points on the outline of a person's face [1], but we found that this was not the case (perhaps because human faces are not perfect ellipses).

The final weight of the particle, because the gradient measure proved so unhelpful, is just the weight of the color matching. Without the gradient feature to help maintain the size of the particles, we were forced to impose a minimum size on particles, since they tended to shrink on a small region of skin once they converged on a face.

To limit the search space of the particle filter, we first captured a static background image (with no face in the image), and then determined pixels of interest by comparing each frame of live video with this background image. If the pixel intensity varied more than a certain threshold from the value in the background image, then we considered it as a potential part of a face in the image; if not, we ignored it in the color-matching routine.

Finally, to identify the probable location of the face in the image, we used a median filter on each of the two structural features of the particle, location and size, for each of the fittest 5 particles. We found that simply averaging the top-weighted ellipses did not eliminate salt-and-pepper noise resulting from false-positives distant from the face.

We interpreted emotion using principle component analysis. We first captured a number of images of the user's face in each of the expressions our program can recognize (smiling, frowning, winking and a neutral face). These images are formed by downsampling the interior region of the ellipse returned by the above face tracker. We used SVD to determine the singular values with the most significant contribution to variation in the image set, and built an eigenspace using the eigenvectors associated with the top 10 singular values.

We projected the new images into this eigenspace, and compared the

coordinates of the novel image to the coordinates of the exemplars. We

chose the emotion of the novel image by picking the emotion of the

closest exemplar, using a simple L1 norm measurement. Finally, the

program outputs an emoticon (one of :-),

:-(, ;-) or :-| ) to represent

the user's emotion.

Because we are using a very simple distance measurement to do matching in the eigenspace, our system is not terribly robust. However, as long as the user's expressions are distinctive and unique, the system actually performs quite well. It has difficulty when two emotions have similar features (ie a smile and a wink with the same mouth, differeing only in the eyes), but if the user exaggerates expressions, the system can usually identify the emotion correctly. We suspect that a more complex matching algorithm (for example an aritificial neural network, or k-nearest-neighbors matching) would yeild better results on more subtle facial expressions.

As a side note, we managed to develop a fairly robust face tracker based solely on color information, thanks to the wonders of particle filters and background subtraction. Because it uses only color information, however, it is easily confused by multiple skin-colored regions in the field of view that are not part of the background. This problem could be rectified by building a motion model into the particles, or by adding a fitness measure that is robust where simple color matching fails.

[1] Elliptical Head Tracking Using Intensity Gradients and Color Histograms. Stan Birchfield. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Santa Barbara, California, pages 232-237, June 1998.